【2022】Top 10 Best Web Scraping Tools for Data Extraction | Web Scraping Tools | ScrapeStorm

Summary: This article introduces the top 10 web scraping tools of 2019: ScrapeStorm, ScrapingHub, Import.io, Dexi.io, Diffbot, Mozenda, ParseHub, Webhose.io, WebHarvy, and OutWit.

Web scraping tools are designed to extract the information you need from websites. They can save a great deal of time compared with collecting the data by hand.
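To show roughly what "extracting information" looks like in code, here is a minimal sketch that uses only Python's standard library. It is not how any of the tools below work internally. It parses an HTML page and collects the absolute URLs of its links. Downloading the page is left out, and the sample HTML is made up.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects absolute URLs from <a href="..."> tags."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    # Resolve relative links against the page's own URL.
                    self.links.append(urljoin(self.base_url, value))


def extract_links(html: str, base_url: str) -> list[str]:
    parser = LinkExtractor(base_url)
    parser.feed(html)
    parser.close()
    return parser.links


page = '<p><a href="/about">About</a> <a href="https://example.org/x">X</a></p>'
print(extract_links(page, "https://example.com/"))
# ['https://example.com/about', 'https://example.org/x']
```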

Here is a list of recommended tools with strong functionality and effectiveness.

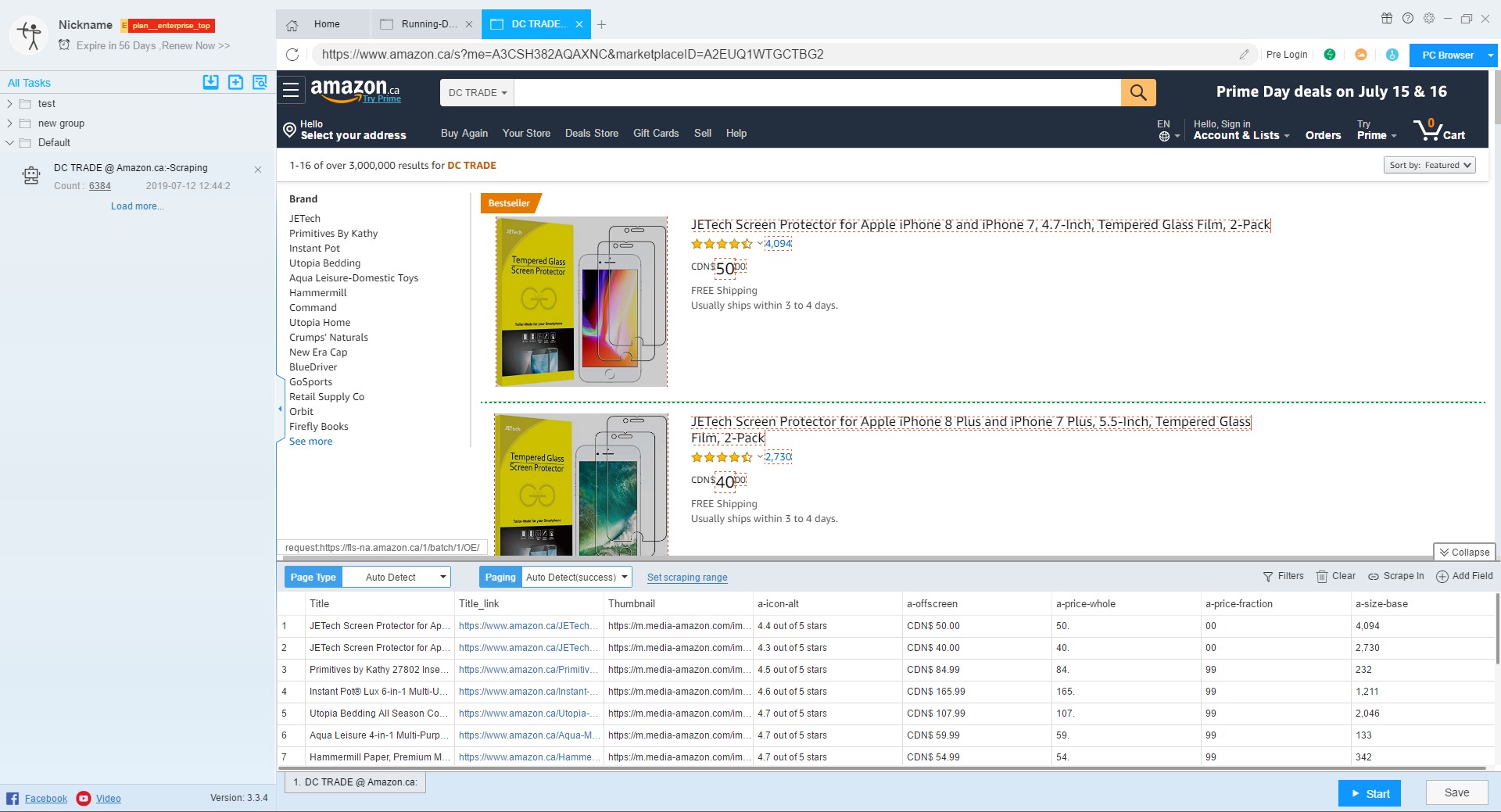

1. ScrapeStorm

ScrapeStorm is an AI-powered visual web scraping tool that can extract data from almost any website without writing any code.

It is powerful and very easy to use. You only need to enter the URLs, and it intelligently identifies the content and the next-page button. No complicated configuration is needed, and scraping takes one click.
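Under the hood, following a "next page" button comes down to a loop that keeps fetching the next-page link until there is none. The Python sketch below shows that loop. It is not ScrapeStorm's implementation. It assumes a hypothetical `fetch` function that returns an already parsed page as a dict with `items` and `next` keys, and an in-memory dict stands in for the website.

```python
from typing import Callable, Optional


def crawl_pages(start_url: str, fetch: Callable[[str], dict], max_pages: int = 100) -> list:
    """Follow "next page" links from start_url and collect the items on every page."""
    items: list = []
    visited: set = set()
    url: Optional[str] = start_url
    # Stop at the last page, on a link loop, or after max_pages pages.
    while url is not None and url not in visited and len(visited) < max_pages:
        visited.add(url)
        page = fetch(url)
        items.extend(page["items"])
        url = page.get("next")  # None on the last page
    return items


# Hypothetical three-page listing standing in for real HTTP requests.
site = {
    "/list?page=1": {"items": ["a", "b"], "next": "/list?page=2"},
    "/list?page=2": {"items": ["c"], "next": "/list?page=3"},
    "/list?page=3": {"items": ["d"], "next": None},
}
print(crawl_pages("/list?page=1", site.__getitem__))  # ['a', 'b', 'c', 'd']
```

The `visited` set and `max_pages` cap are there so that a site whose "next" links loop back, or never end, cannot keep the crawler running forever.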

ScrapeStorm is a desktop app for Windows, Mac, and Linux. You can download the results in various formats, including Excel, HTML, TXT, and CSV. You can also export data to databases and websites.
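ScrapeStorm's exporters are built in. For readers who want to see what a CSV export involves, here is a generic sketch using Python's `csv` module, not ScrapeStorm's own code. It assumes each scraped record is a dict. The header is the union of all keys, so records with missing fields still line up.

```python
import csv
import io


def to_csv(records: list[dict]) -> str:
    """Serialize scraped records (dicts) to CSV text with a header row."""
    if not records:
        return ""
    # Union of keys in first-seen order, so records with missing fields still fit.
    fieldnames: list[str] = []
    for record in records:
        for key in record:
            if key not in fieldnames:
                fieldnames.append(key)
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, restval="")
    writer.writeheader()
    writer.writerows(records)  # fields containing commas are quoted automatically
    return buffer.getvalue()


rows = [
    {"title": "Widget", "price": "9.99"},
    {"title": "Gadget, large", "price": "19.50", "sku": "G-1"},
]
print(to_csv(rows))
```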

Features:

1) Intelligent identification

2) IP Rotation and Verification Code (CAPTCHA) Identification

3) Data Processing and Deduplication

4) File Download

5) Scheduled Tasks

6) Automatic Export

7) RESTful API and Webhook

8) Automatic Identification of E-commerce SKUs and Large Images
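Deduplication (feature 3) usually means picking key fields and keeping only the first record for each key. The Python sketch below shows one generic way to do that. It is an assumption about the technique, not ScrapeStorm's algorithm, and the record layout is hypothetical.

```python
def deduplicate(records: list[dict], key_fields: tuple[str, ...]) -> list[dict]:
    """Keep the first record for each combination of key_fields, preserving order."""
    seen = set()
    unique = []
    for record in records:
        # Normalise whitespace and case so trivially different copies collapse.
        key = tuple(str(record.get(f, "")).strip().lower() for f in key_fields)
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


rows = [
    {"sku": "A-1", "title": "Red Shirt"},
    {"sku": "a-1 ", "title": "Red shirt"},
    {"sku": "B-2", "title": "Blue Shirt"},
]
print(deduplicate(rows, ("sku",)))
# [{'sku': 'A-1', 'title': 'Red Shirt'}, {'sku': 'B-2', 'title': 'Blue Shirt'}]
```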

Pros:

1) Easy to use

2) Fair price

3) Visual point and click operation

4) All systems supported

Cons:

No cloud services

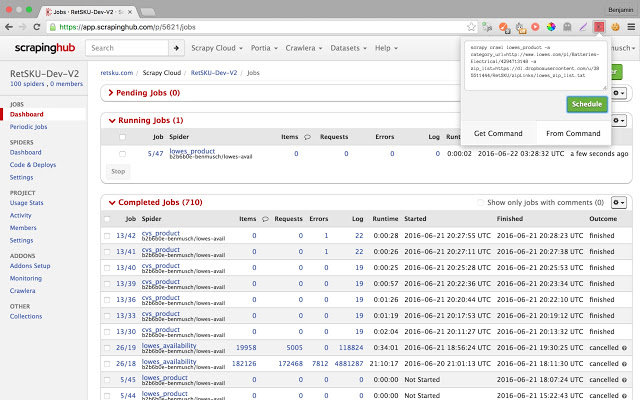

2. ScrapingHub

Scrapinghub is a developer-focused web scraping platform that offers several useful services for extracting structured information from the Internet.

Scrapinghub has four major tools – Scrapy Cloud, Portia, Crawlera, and Splash.

Features:

1) Converts an entire web page into organized content

2) Toggle for on-page JavaScript support

3) Handling Captchas

Pros:

1) Offers a pool of IP addresses covering more than 50 countries, which helps solve IP ban problems

2) Very useful temporal charts

3) Handling login forms

4) The free plan retains extracted data in the cloud for 7 days
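An IP pool helps against bans because consecutive requests go out through different proxies. The sketch below shows a simple round-robin rotation with Python's standard library. It is only an illustration of the idea, not how Scrapinghub's Crawlera works. The proxy addresses are placeholders.

```python
import itertools
import urllib.request


class ProxyRotator:
    """Round-robin over a pool of proxy URLs (placeholder addresses in the example)."""

    def __init__(self, proxies: list[str]) -> None:
        if not proxies:
            raise ValueError("proxy pool is empty")
        self._pool = itertools.cycle(proxies)

    def next_proxy(self) -> str:
        return next(self._pool)

    def next_opener(self) -> urllib.request.OpenerDirector:
        """Build an opener that sends HTTP and HTTPS traffic through the next proxy."""
        proxy = self.next_proxy()
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        return urllib.request.build_opener(handler)


rotator = ProxyRotator(["http://10.0.0.1:8080", "http://10.0.0.2:8080"])
print([rotator.next_proxy() for _ in range(3)])
# ['http://10.0.0.1:8080', 'http://10.0.0.2:8080', 'http://10.0.0.1:8080']
```

In use, each request would call `rotator.next_opener().open(url, timeout=10)`. Real services also retire proxies that start getting blocked, and this sketch leaves that out.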

Cons:

1) No Refunds

2) Not easy to use, and requires many extensive add-ons

3) Cannot process large datasets

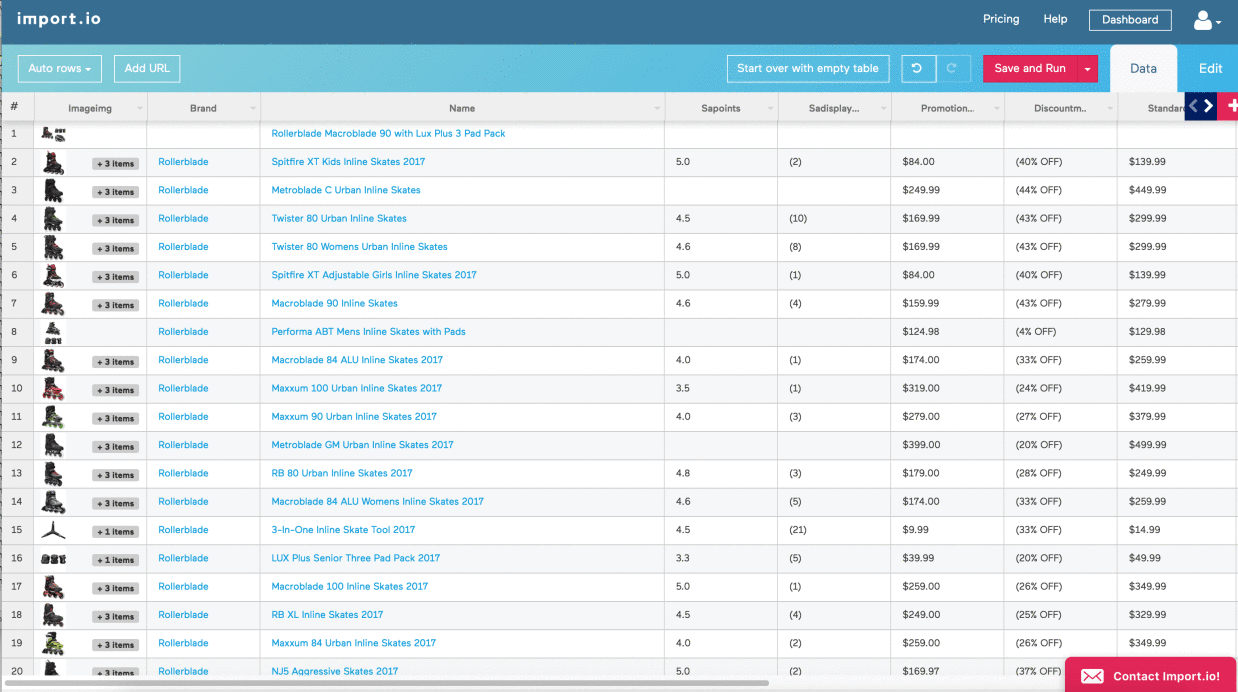

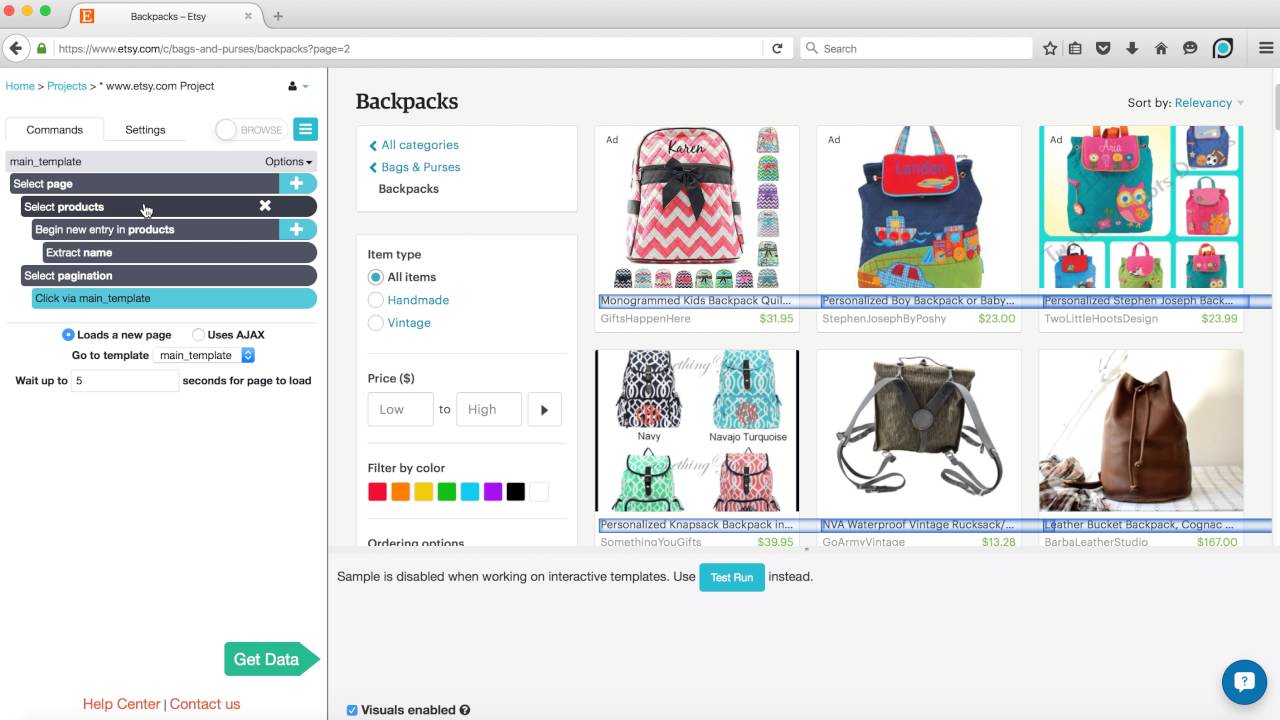

3. Import.io

Import.io is a platform which facilitates the conversion of semi-structured information in web pages into structured data, which can be used for anything from driving business decisions to integration with apps and other platforms.

They offer real-time data retrieval through their JSON REST-based and streaming APIs, and integration with many common programming languages and data analysis tools.
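The idea of turning semi-structured page content into structured data can be shown on the simplest case, an HTML table. The sketch below converts a table into a list of dicts keyed by the header row. It is a generic stdlib example, not Import.io's extractor, and it assumes a well-formed table with explicit closing `</td>`/`</tr>` tags.

```python
from html.parser import HTMLParser


class TableParser(HTMLParser):
    """Collects the text of each <tr>'s <th>/<td> cells as a list of rows."""

    def __init__(self) -> None:
        super().__init__()
        self.rows: list[list[str]] = []
        self._row = None   # cells of the row being read
        self._cell = None  # text fragments of the cell being read

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)


def table_to_records(html: str) -> list[dict]:
    parser = TableParser()
    parser.feed(html)
    parser.close()
    if not parser.rows:
        return []
    header, *body = parser.rows  # first row is treated as the header
    return [dict(zip(header, row)) for row in body]


html = ("<table><tr><th>Name</th><th>Price</th></tr>"
        "<tr><td>Widget</td><td>9.99</td></tr>"
        "<tr><td><b>Gadget</b></td><td>19.50</td></tr></table>")
print(table_to_records(html))
# [{'Name': 'Widget', 'Price': '9.99'}, {'Name': 'Gadget', 'Price': '19.50'}]
```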

Features:

1) Point-and-click training

2) Automate web interaction and workflows

3) Easy scheduling of data extraction

Pros:

1) Supports almost every system

2) Nice clean interface and simple dashboard

3) No coding required

Cons:

1) Overpriced

2) Each sub-page costs credits

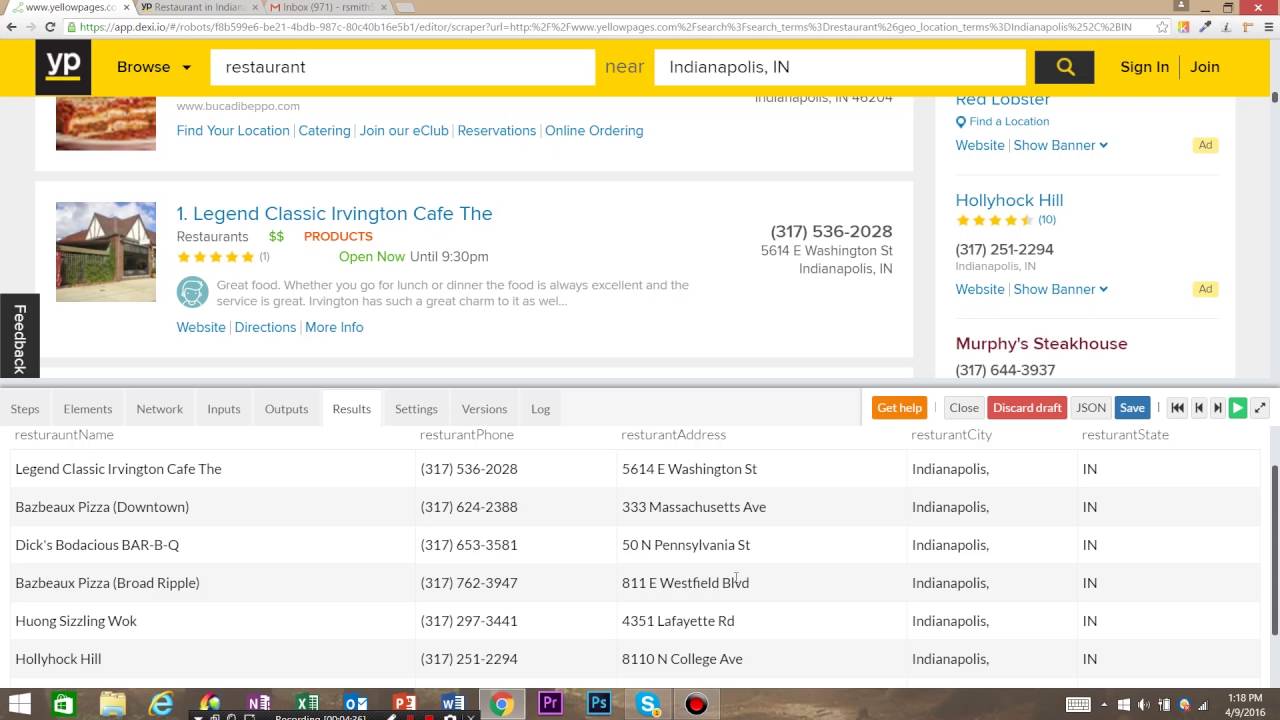

4. Dexi.io

Dexi.io is a web scraping and intelligent automation tool for professionals. It is the most developed web scraping tool, enabling businesses to extract and transform data from any web source through leading automation and intelligent mining technology.

Dexi.io allows you to scrape or interact with data from any website with human precision. Its advanced features and APIs help you transform and combine data into powerful datasets or solutions.
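"Combining data" from several sources often comes down to joining records on a shared key. The sketch below is a plain left join in Python. It illustrates the general technique and is not Dexi.io's API. The `sku` key and the sample records are hypothetical.

```python
def combine(left: list[dict], right: list[dict], key: str) -> list[dict]:
    """Left join: enrich each record in `left` with fields from the matching `right` record."""
    # If `right` repeats a key, the last occurrence wins.
    index = {r[key]: r for r in right if key in r}
    combined = []
    for record in left:
        merged = dict(record)
        match = index.get(record.get(key))
        if match is not None:
            for field, value in match.items():
                merged.setdefault(field, value)  # keep left-hand values on conflicts
        combined.append(merged)
    return combined


products = [{"sku": "A-1", "title": "Red Shirt"}, {"sku": "C-3", "title": "Hat"}]
prices = [{"sku": "A-1", "price": 12.5}, {"sku": "B-2", "price": 8.0}]
print(combine(products, prices, "sku"))
# [{'sku': 'A-1', 'title': 'Red Shirt', 'price': 12.5}, {'sku': 'C-3', 'title': 'Hat'}]
```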

Features:

1) Provides several integrations out of the box

2) Automatically de-duplicates data before sending it to your own systems

3) Provides tools for when robots fail

Pros:

1) No coding required

2) Agent creation services available

Cons:

1) Difficult for non-developers

2) Difficult robot debugging

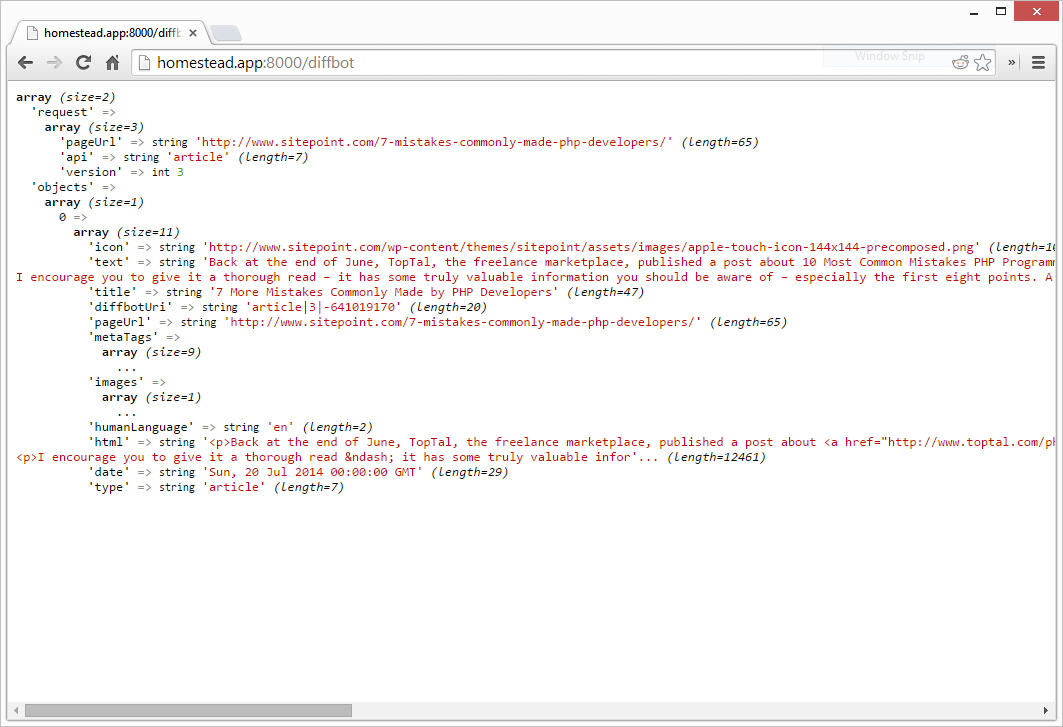

5. Diffbot

Diffbot lets you get various types of useful data from the web without hassle. You don’t need to pay for costly web scraping or spend time on manual research: the tool extracts structured data from any URL with AI extractors.

Features:

1) Query with a Powerful, Precise Language

2) Offers multiple sources of data

3) Extracts structured data from any URL with AI Extractors

4) Comprehensive Knowledge Graph

Pros:

1) Can discover relationships between entities

2) Batch Processing

3) Can query and get the exact answers you need

Cons:

1) Initial output is complex

2) Requires a lot of cleaning before being usable
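The cleaning step mentioned in the cons typically includes normalizing whitespace and turning display strings into numbers. Below is a generic Python post-processing sketch, not part of Diffbot. The price parser assumes ',' is a thousands separator and '.' is the decimal point. That does not hold for many European formats.

```python
import re
from decimal import Decimal, InvalidOperation
from typing import Optional


def clean_text(value: str) -> str:
    """Collapse runs of whitespace (including newlines and tabs) and trim the ends."""
    return re.sub(r"\s+", " ", value).strip()


def parse_price(value: str) -> Optional[Decimal]:
    """Pull a number such as '1,299.00' out of a string like 'USD 1,299.00 '.

    Assumes ',' is a thousands separator and '.' the decimal point.
    """
    match = re.search(r"\d[\d,]*(?:\.\d+)?", value)
    if not match:
        return None
    try:
        return Decimal(match.group().replace(",", ""))
    except InvalidOperation:
        return None


print(clean_text("  Red\n  Shirt\t "))  # Red Shirt
print(parse_price("USD 1,299.00 "))     # 1299.00
print(parse_price("N/A"))               # None
```

`Decimal` is used instead of `float` so that prices keep their exact decimal value.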

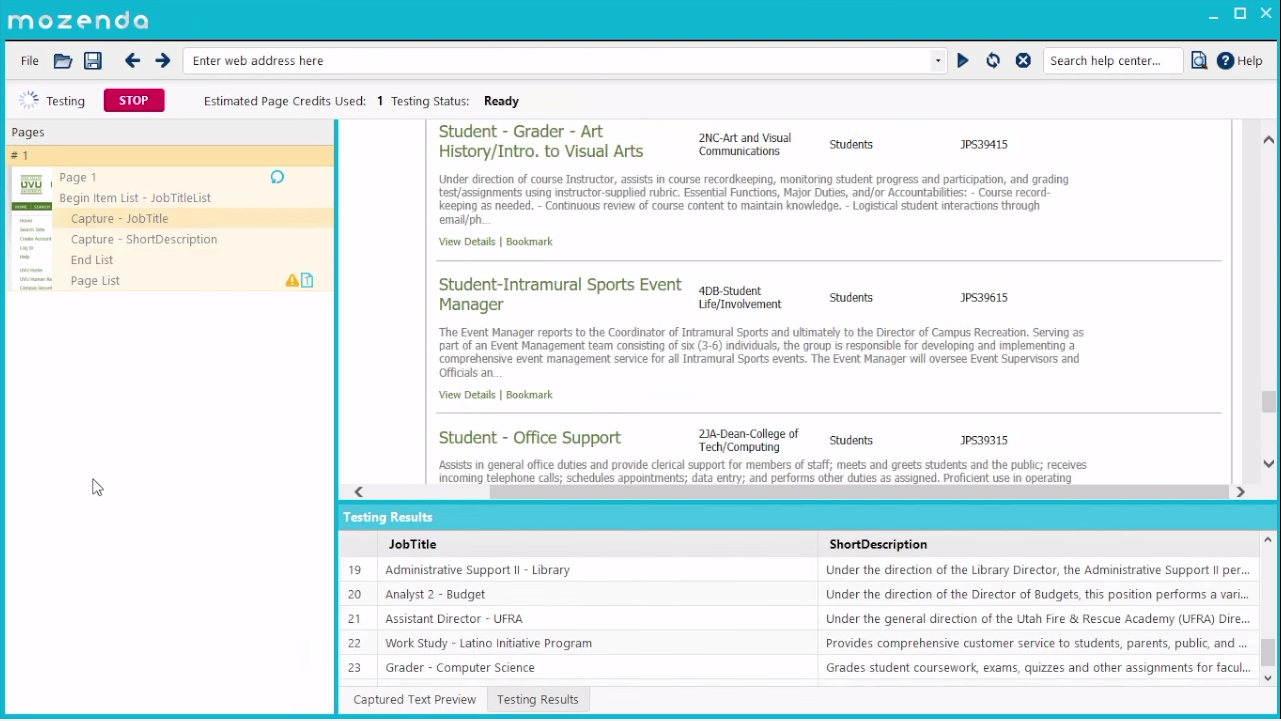

6. Mozenda

Mozenda provides technology, delivered as either software (SaaS and on-premise options) or as a managed service, that allows people to capture unstructured web data, convert it into a structured format, then “publish and format it in a way that companies can use.”

Mozenda provides 1) cloud-hosted software, 2) on-premise software, and 3) data services. With over 15 years of experience, Mozenda enables you to automate web data extraction from any website.

Features:

1) Scrape websites through different geographical locations.

2) API Access

3) Point-and-click interface

4) Receive email alerts when agents run successfully

Pros:

1) Visual interface

2) Comprehensive Action Bar

3) Multi-threaded extraction and smart data aggregation

Cons:

1) Unstable when dealing with large websites

2) A bit expensive
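Multi-threaded extraction pays off because scraping is mostly waiting on the network. The sketch below fans URLs out to a thread pool with Python's `concurrent.futures`. It is a generic illustration, not Mozenda's engine. The `fetch` argument is a hypothetical stand-in for a real download function such as one built on `urllib.request.urlopen`.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def fetch_all(urls: list[str], fetch: Callable[[str], str], max_workers: int = 4) -> list[tuple[str, str]]:
    """Run `fetch` on many URLs concurrently and pair each URL with its result."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() yields results in input order, even if they finish out of order;
        # an exception raised by `fetch` is re-raised here.
        results = list(pool.map(fetch, urls))
    return list(zip(urls, results))


# Hypothetical fetch function so the example runs without network access.
def fake_fetch(url: str) -> str:
    return f"<html>{url}</html>"


print(fetch_all(["/a", "/b"], fake_fetch))
# [('/a', '<html>/a</html>'), ('/b', '<html>/b</html>')]
```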

7. ParseHub

ParseHub is a visual data extraction tool that anyone can use to get data from the web. You’ll never have to write a web scraper again, and you can easily create APIs for websites that don’t have them. ParseHub can easily handle interactive maps, calendars, search, forums, nested comments, infinite scrolling, authentication, dropdowns, forms, JavaScript, AJAX and much more. ParseHub offers both a free plan for everyone and custom enterprise plans for massive data extraction.
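Many infinite-scrolling pages load more items from a JSON endpoint as you scroll. A scraper can often page through that endpoint directly. The sketch below shows the loop in Python, assuming a hypothetical endpoint that accepts an offset and a limit. It is not ParseHub's API. A plain list stands in for the server.

```python
from typing import Callable


def fetch_infinite_scroll(fetch_batch: Callable[[int, int], list],
                          limit: int = 20, max_items: int = 10_000) -> list:
    """Request successive (offset, limit) batches until a short or empty batch arrives."""
    items: list = []
    offset = 0
    while len(items) < max_items:
        batch = fetch_batch(offset, limit)
        items.extend(batch)
        if len(batch) < limit:  # the endpoint has no more items
            break
        offset += len(batch)
    return items[:max_items]


# Hypothetical endpoint backed by a list of 45 items.
data = list(range(45))
result = fetch_infinite_scroll(lambda offset, limit: data[offset:offset + limit])
print(len(result))  # 45
```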

Features:

1) Scheduled Runs

2) Automatic IP rotation

3) Interactive websites (AJAX & JavaScript)

4) Dropbox integration

5) API & Web-hooks

Pros:

1) Dropbox, S3 integration

2) Support multiple systems

3) Data aggregation from multiple websites

Cons:

1) Limited free plan

2) Complex user interface

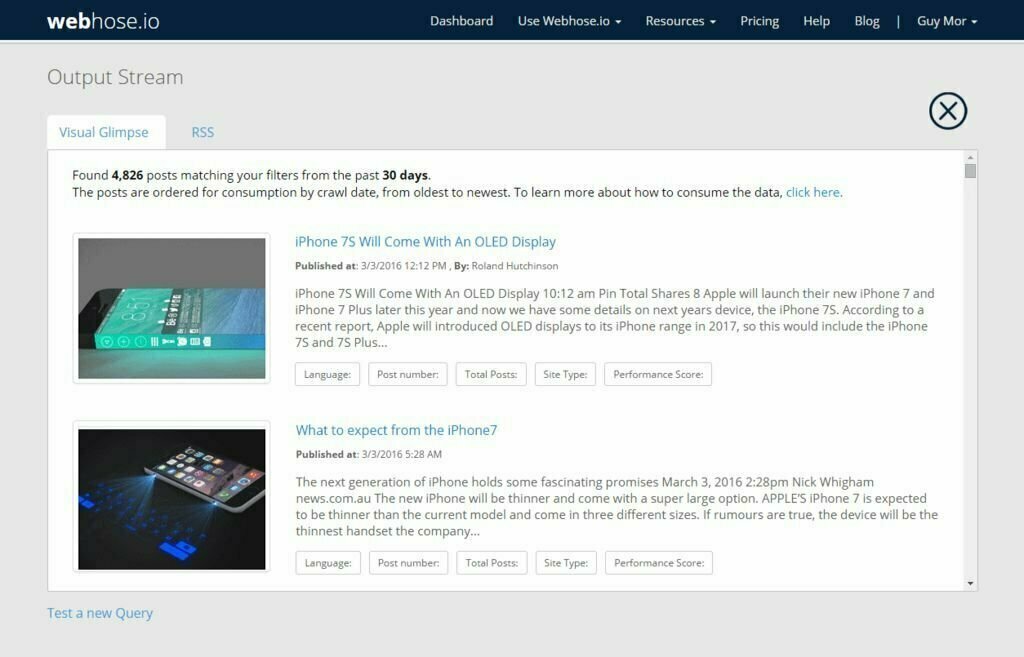

8. Webhose.io

The Webhose.io API provides easy-to-integrate, high-quality data and metadata from hundreds of thousands of global online sources such as message boards, blogs, reviews, news and more.

Available either through a query-based API or via a firehose, the Webhose.io API provides low-latency, high-coverage data, with an efficient, dynamic ability to add new sources in record time.

Features:

1) Get structured, machine-readable datasets in JSON and XML formats

2) Helps you to access a massive repository of data feeds without paying any extra fees

3) Supports granular analysis
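Because the same dataset may arrive as JSON or as XML, it helps to map both into one record shape. Here is a stdlib sketch of that. The `posts`/`post`, `title` and `url` field names are hypothetical and do not reflect Webhose.io's actual response schema.

```python
import json
import xml.etree.ElementTree as ET


def posts_from_json(text: str) -> list[dict]:
    """Read records from a hypothetical {"posts": [{"title": ..., "url": ...}]} document."""
    return [{"title": p["title"], "url": p["url"]} for p in json.loads(text)["posts"]]


def posts_from_xml(text: str) -> list[dict]:
    """Read the same records from a hypothetical <post><title/><url/></post> document."""
    root = ET.fromstring(text)
    return [{"title": p.findtext("title"), "url": p.findtext("url")} for p in root.iter("post")]


json_doc = '{"posts": [{"title": "Hello", "url": "https://example.com/1"}]}'
xml_doc = ("<response><posts><post><title>Hello</title>"
           "<url>https://example.com/1</url></post></posts></response>")
print(posts_from_json(json_doc) == posts_from_xml(xml_doc))  # True
```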

Pros:

1) Query system is simple to use

2) Consistent across data providers

Cons:

1) Has a bit of learning curve

2) Not suited to businesses and enterprises

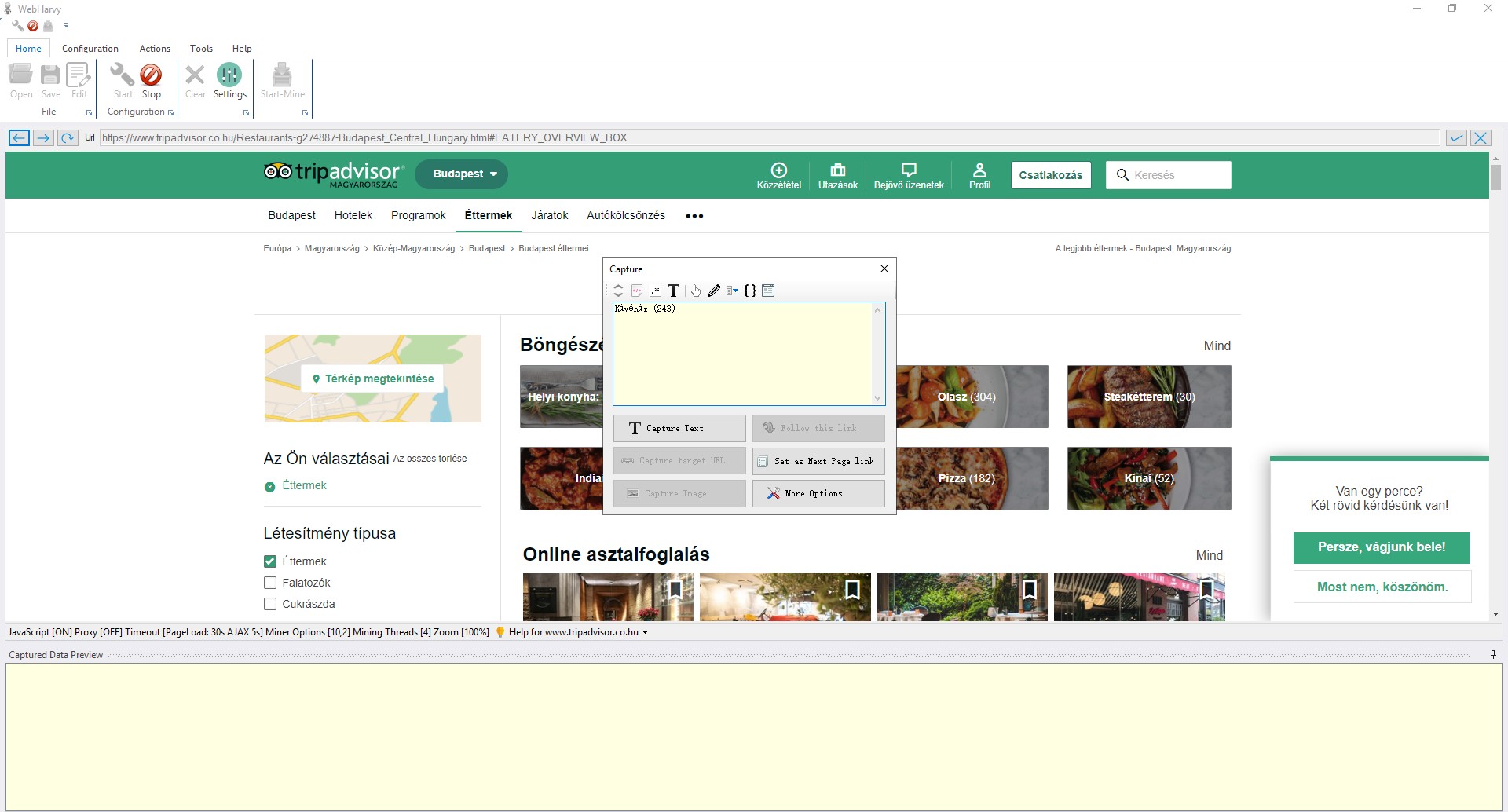

9. WebHarvy

WebHarvy lets you easily extract data from websites to your computer. No programming or scripting knowledge is required, and WebHarvy works with all websites. You can use WebHarvy to extract data from product listings and e-commerce websites, yellow pages, real estate listings, social networks, forums, etc. WebHarvy lets you select the data you need with mouse clicks, so it is incredibly easy to use. It scrapes data from multiple pages of listings, following each link.

Features:

1) Point and click interface

2) Safeguard Privacy

Pros:

1) Visual interface

2) No coding required

Cons:

1) Slow speed

2) May lose data after several days of scraping

3) Scraping stops from time to time
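A common defense against the data loss and interrupted runs listed above is to checkpoint results as they arrive. On restart, items that are already saved get skipped. The Python sketch below appends records to a JSON Lines file and resumes from it. It is a generic technique, not a WebHarvy feature. The `id` field and the file name are hypothetical.

```python
import json
import os
import tempfile


def load_seen_ids(path: str) -> set:
    """Read the IDs already saved in a JSON Lines checkpoint file."""
    seen = set()
    if not os.path.exists(path):
        return seen
    with open(path, encoding="utf-8") as f:
        for line in f:
            try:
                seen.add(json.loads(line)["id"])
            except (json.JSONDecodeError, KeyError, TypeError):
                continue  # skip blank lines or a line truncated by a crash
    return seen


def save_new_records(path: str, records: list[dict]) -> int:
    """Append records whose IDs are not saved yet; return how many were written."""
    seen = load_seen_ids(path)
    written = 0
    with open(path, "a", encoding="utf-8") as f:
        for record in records:
            if record["id"] in seen:
                continue
            f.write(json.dumps(record, ensure_ascii=False) + "\n")
            f.flush()  # hand each record to the OS right away so a later crash keeps it
            seen.add(record["id"])
            written += 1
    return written


checkpoint = os.path.join(tempfile.mkdtemp(), "results.jsonl")  # hypothetical file name
print(save_new_records(checkpoint, [{"id": 1}, {"id": 2}]))  # 2
print(save_new_records(checkpoint, [{"id": 2}, {"id": 3}]))  # 1 (id 2 was already saved)
```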

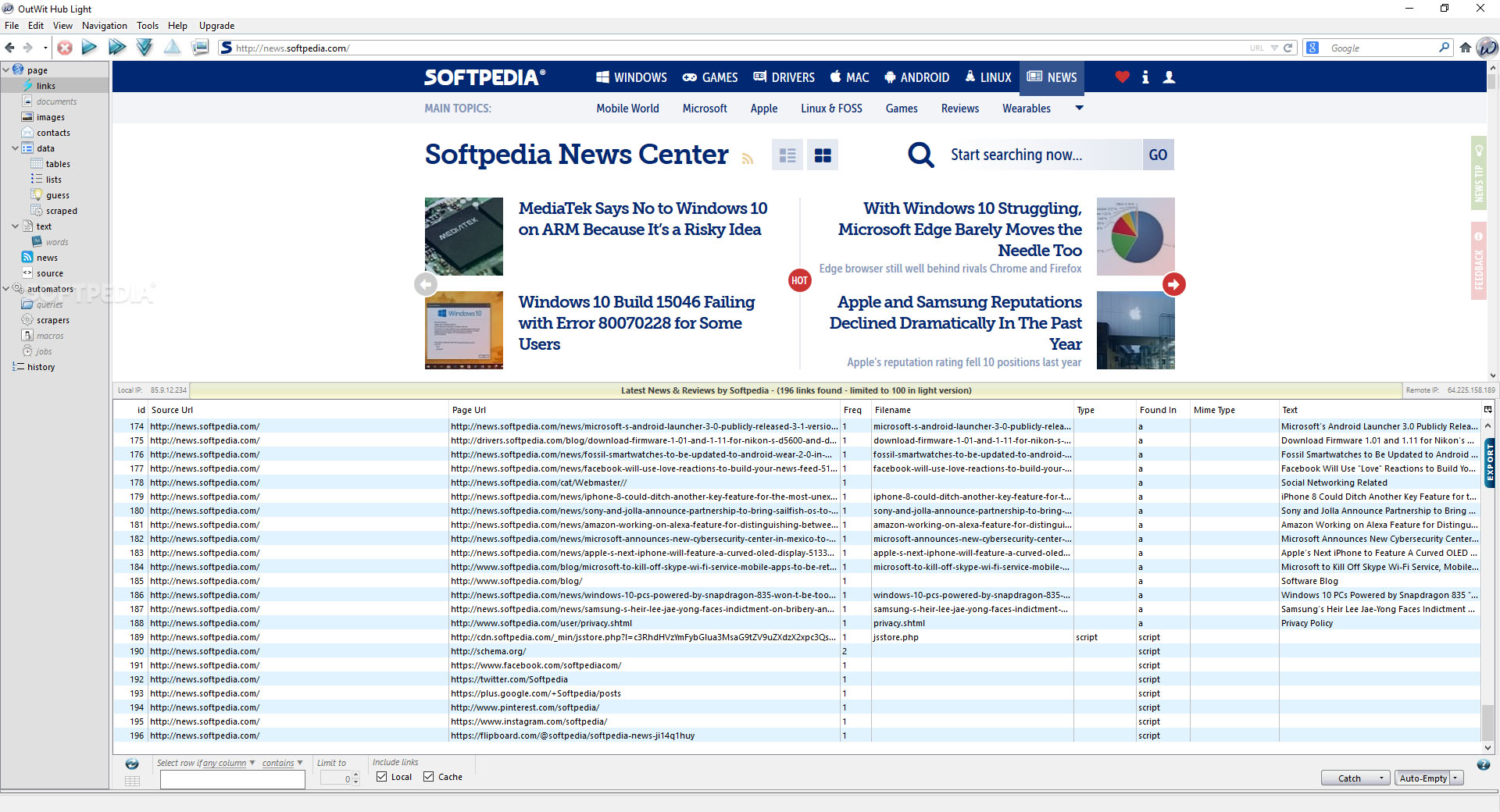

10. OutWit

OutWit Hub is a web data extraction application designed to automatically extract information from online or local resources. It recognizes and grabs links, images, documents, contacts, recurring vocabulary and phrases, and RSS feeds, and it converts structured and unstructured data into formatted tables that can be exported to spreadsheets or databases.

Features:

1) Recognition and extraction of links, email addresses, structured & non-structured data, RSS news

2) Extraction & download of images and documents

3) Automated browsing with user-defined Web exploration rules

4) Macro automation

5) Periodical job execution
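Email-address recognition like OutWit's can be approximated with a regular expression. The Python sketch below is deliberately simple. It covers common addresses, not every form RFC 5322 allows, and it is not OutWit's detector.

```python
import re

# Simple pattern for common addresses; it does not cover every form RFC 5322 allows.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9-]+(?:\.[A-Za-z0-9-]+)*\.[A-Za-z]{2,}")


def extract_emails(text: str) -> list[str]:
    """Return unique email addresses in order of first appearance."""
    found: list[str] = []
    for match in EMAIL_RE.findall(text):
        if match not in found:
            found.append(match)
    return found


text = "Contact sales@example.com or support@mail.example.co.uk. Again: sales@example.com"
print(extract_emails(text))
# ['sales@example.com', 'support@mail.example.co.uk']
```

The trailing period after `.co.uk` is not captured, because the pattern has to end in letters.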

Pros:

1) No coding required

2) Simple graphical user interface

Cons:

1) Lack of a point-and-click interface

2) Tutorials need to be improved

11. Scraping-Bot.io

Scraping-Bot.io is an efficient tool for scraping data from a URL. It works particularly well on product pages, where it collects everything you need to know: image, product title, product price, product description, stock, delivery costs, EAN, product category, brand, colour, etc. You can also use it to check your ranking on Google and improve your SEO. Use the live test on their home page to try it without coding.
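When a scraper returns EANs, running a quick check-digit validation before using them catches truncated or garbled codes. The sketch below implements the standard EAN-13 check in Python. It is a separate post-processing step, not part of Scraping-Bot.io's API.

```python
def is_valid_ean13(code: str) -> bool:
    """Check the length, digits and check digit of an EAN-13 barcode string."""
    code = code.strip()
    if len(code) != 13 or not (code.isascii() and code.isdigit()):
        return False
    digits = [int(c) for c in code]
    # Weights alternate 1, 3, 1, 3, ... over the first 12 digits, left to right.
    total = sum(d * (3 if i % 2 else 1) for i, d in enumerate(digits[:12]))
    check = (10 - total % 10) % 10
    return check == digits[12]


print(is_valid_ean13("4006381333931"))  # True
print(is_valid_ean13("4006381333932"))  # False (wrong check digit)
```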

Features:

1) JS rendering (Headless Chrome)

2) High quality proxies

3) Full Page HTML

4) Geotargeting

Pros:

1) Allows for large bulk scraping needs

2) Free basic usage monthly plan

3) Parsed data for ecommerce product pages (price, currency, EAN, etc.)

Cons:

1) Not suited to non-developers

2) API only, with no user interface

Disclaimer: This article was contributed by our users. In case of infringement, please let us know and it will be removed immediately.